The goal of this project was for our team of IxD.ma students, consisting of Zac Heisey, Inka Jerkku & Sofia Kamergorodski, to help two IxD alumni, the team at Mental Pin, integrate artificial intelligence into their mobile application, which helps people handle their anxiety and OCD tics. While at first we thought it was another “keeping up with the craze” project, the result turned out differently.

Research

We delved deep into the world of artificial intelligence combined with mental health. This was at the time when large language models (mainly ChatGPT) were first blooming and everybody was getting into them. Needless to say, we found a bunch of amazing experiences, from people using ChatGPT as their assistant to people using it as a place to vent, yet we also found people who had done themselves more harm than good by putting their thoughts into a lifeless machine.

We did 6 semi-structured user interviews: 4 with people who were on Mental Pin’s waiting list and 2 with people who shared their contacts in a Google Form that we sent out to gather some qualitative data. The interviews went very well. We were shocked at how open people were about their mental health struggles, and also how open they were to looking towards technology to help them on their journey.

However, we did not stop there. As we were all slightly skeptical of artificial intelligence taking over mental health solutions, we also explored some out-of-the-box ideas.

Exploring & defining

Since we were quite skeptical of AI at first, we looked at some out-of-the-box ideas. We did lots of Crazy 8s, but had a few great ideas from the beginning. One example was an “analog” unboxing experience instead of a digital one, as Mental Pin’s usage revolved around an analog device and the application was not mandatory. We wanted to make it feel less like a chore and more like an act of self-care.

Here is one of the examples we found on the internet of a lovely unboxing experience:

We also considered gamifying the application, because that is what most apps seem to be doing today. When thinking about this, we thought of Duolingo as an example.

As we were slowly but surely diverging from the AI premise, we had the feeling that we might have to kill our darlings: however cool these initial ideas might seem, we would still have to look into how exactly to integrate a language model or something similar into the application. And that is exactly what happened.

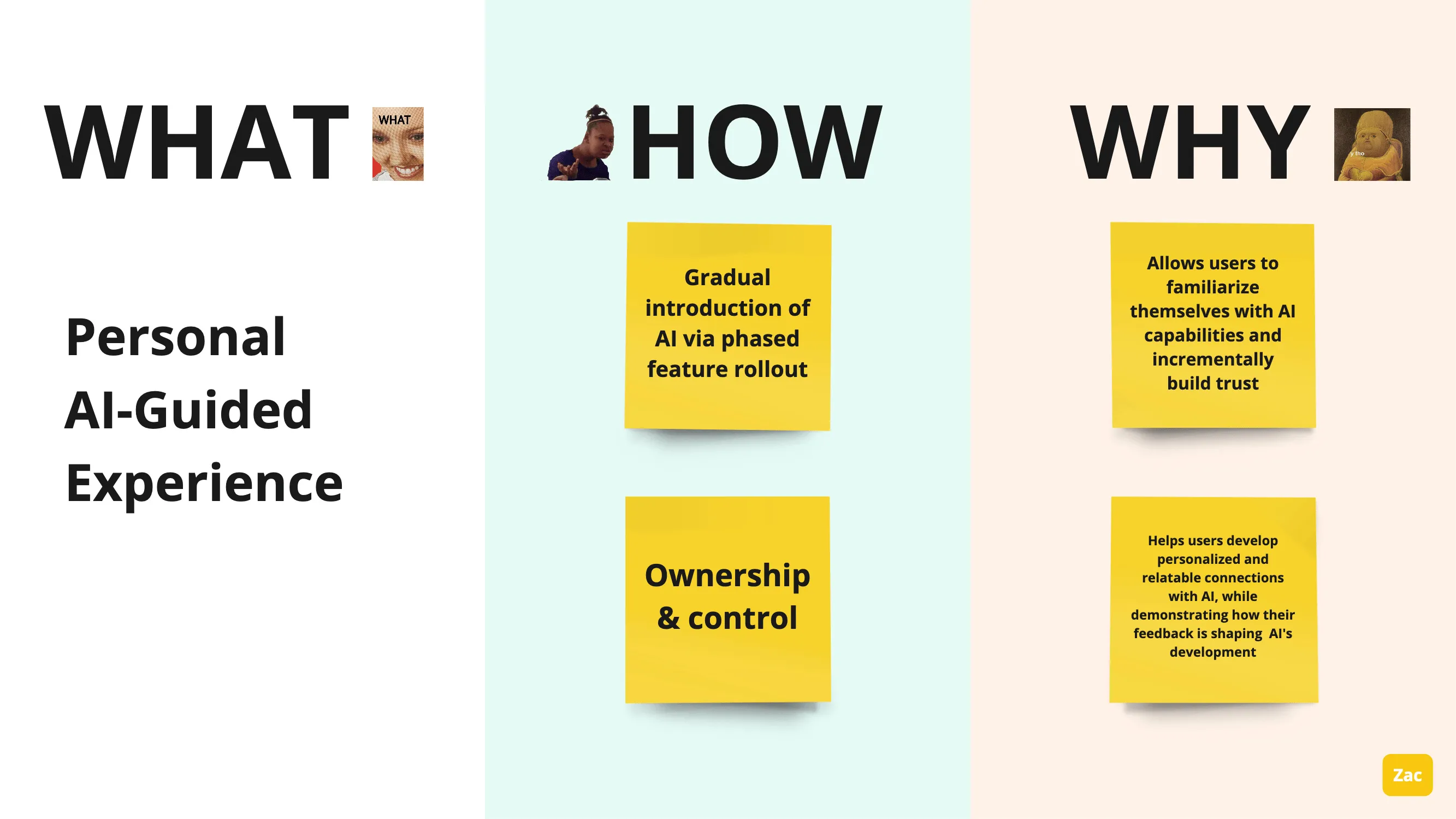

We presented the four ideas, which turned out to be the following:

- A physical/analog journal

- A tasks & rewards system in the application

- Peer support (creating another type of social media)

- A personal AI-guided experience

We then had to follow up on the two that were most exciting for Mental Pin’s team: the AI-guided experience and the tasks and rewards system.

Here are a few slide examples from the presentation to Mental Pin’s team about our main ideas:

Results

We then continued with the two aforementioned ideas. For this, we made a Figma board to prototype the ideas in the mobile app. I was mostly in charge of Figma-related things because my teammates weren’t as proficient with it. I mean, sure, neither was I, but I was very willing to jump right in and spend time with it, as it’s one of the tools of the trade.

We split the application into three rough parts:

- The onboarding

- Episode annotation

- Tasks & Rewards system (which was split into multiple parts)

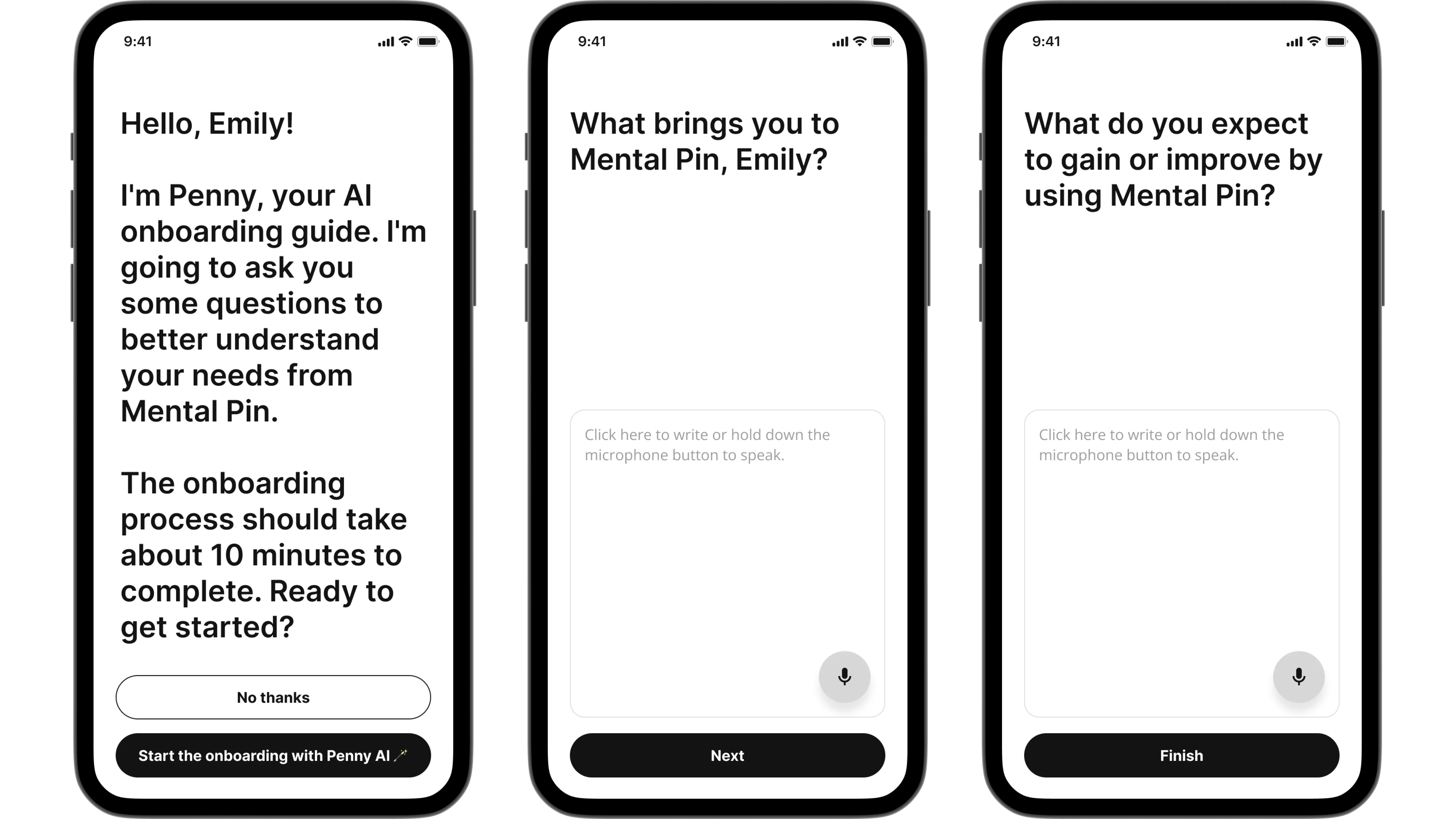

Here are some screenshots of the application we designed, starting with the onboarding phase. We also created a ChatGPT-esque animation, where the questions appear a few words at a time instead of all at once:

And some screenshots from the episode annotation phase:

As the above two were the topics I spent the most time on, I will explain them a bit further. For the onboarding, we took inspiration, as said, from ChatGPT. The questions were going to be contextual, so that Penny, the onboarding assistant, learns from whatever the user is answering.
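To make the "few words at a time" reveal more concrete, here is a minimal sketch of how such an animation could be driven. It is an illustration only, not code from our prototype (which lived in Figma): the function name `reveal_in_chunks`, the chunk size of three words, and the sample question are all assumptions.

```python
import time
from typing import Iterator


def reveal_in_chunks(text: str, words_per_step: int = 3) -> Iterator[str]:
    """Yield progressively longer prefixes of `text`, a few words at a time.

    Hypothetical helper: each yielded string is what the screen would show
    at that step of the animation.
    """
    if words_per_step < 1:
        raise ValueError("words_per_step must be at least 1")
    words = text.split()
    # Step past the end once so the final (possibly shorter) chunk is included.
    for end in range(words_per_step, len(words) + words_per_step, words_per_step):
        yield " ".join(words[:end])


if __name__ == "__main__":
    # Made-up onboarding question, printed frame by frame with a short pause.
    for frame in reveal_in_chunks("How are you feeling right now, honestly?"):
        print(frame)
        time.sleep(0.2)
```

In a real app the pause and chunk size would be tuned by feel; the point is only that the full question is known up front and shown in growing prefixes.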

For the episode annotation, I, sort of out of the blue, came up with a Grammarly-like idea: you can either type or use speech recognition to talk about your episode, and then the application runs automatic sentiment analysis so you don’t have to go through the trouble of annotating everything yourself.
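As a rough illustration of what "automatic sentiment analysis" on an episode description could look like, here is a deliberately naive, word-list-based sketch. This is not how the prototype or Mental Pin's app works. The word lists, the `Annotation` type and the `annotate_episode` function are all hypothetical, and a real product would more likely rely on a trained model.

```python
import re
from dataclasses import dataclass
from typing import List

# Hypothetical, tiny word lists for illustration only.
NEGATIVE_WORDS = {"anxious", "panic", "scared", "worried", "overwhelmed", "afraid"}
POSITIVE_WORDS = {"calm", "better", "relieved", "safe", "proud", "okay"}


@dataclass
class Annotation:
    sentence: str
    score: int   # positive word count minus negative word count
    label: str   # "positive", "negative" or "neutral"


def annotate_episode(text: str) -> List[Annotation]:
    """Split an episode description into sentences and label each one."""
    # Split after sentence-ending punctuation followed by whitespace.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    annotations = []
    for sentence in sentences:
        words = re.findall(r"[a-z']+", sentence.lower())
        score = sum(w in POSITIVE_WORDS for w in words) - sum(
            w in NEGATIVE_WORDS for w in words
        )
        if score > 0:
            label = "positive"
        elif score < 0:
            label = "negative"
        else:
            label = "neutral"
        annotations.append(Annotation(sentence, score, label))
    return annotations
```

The Grammarly-like part of the idea would then be the interface: each labelled sentence could be highlighted in the text, and the user would only correct the labels that feel wrong instead of annotating from scratch.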